Policy Evaluation with Python

Workflow

As a data analyst, it is absolutely essential that you keep detailed documentation of your analysis process. This means saving all of the code that you use for a project somewhere convenient so that you or anyone else can quickly and easily exactly reproduce your original results. In addition to saving your code, you should also provide explanations for your procedures and code so that you will always remember your data sources, what each line of code is doing, and why you analyzed the data the way that you did. Not only will this help you remember the details about your project, but it will also make it easy for others to read through your code and validate your methods. Practically, your code should be saved in a script file, though I strongly recommend using a markdown document since it allows you to create documents with written prose and code at the same time, exactly like this guide!

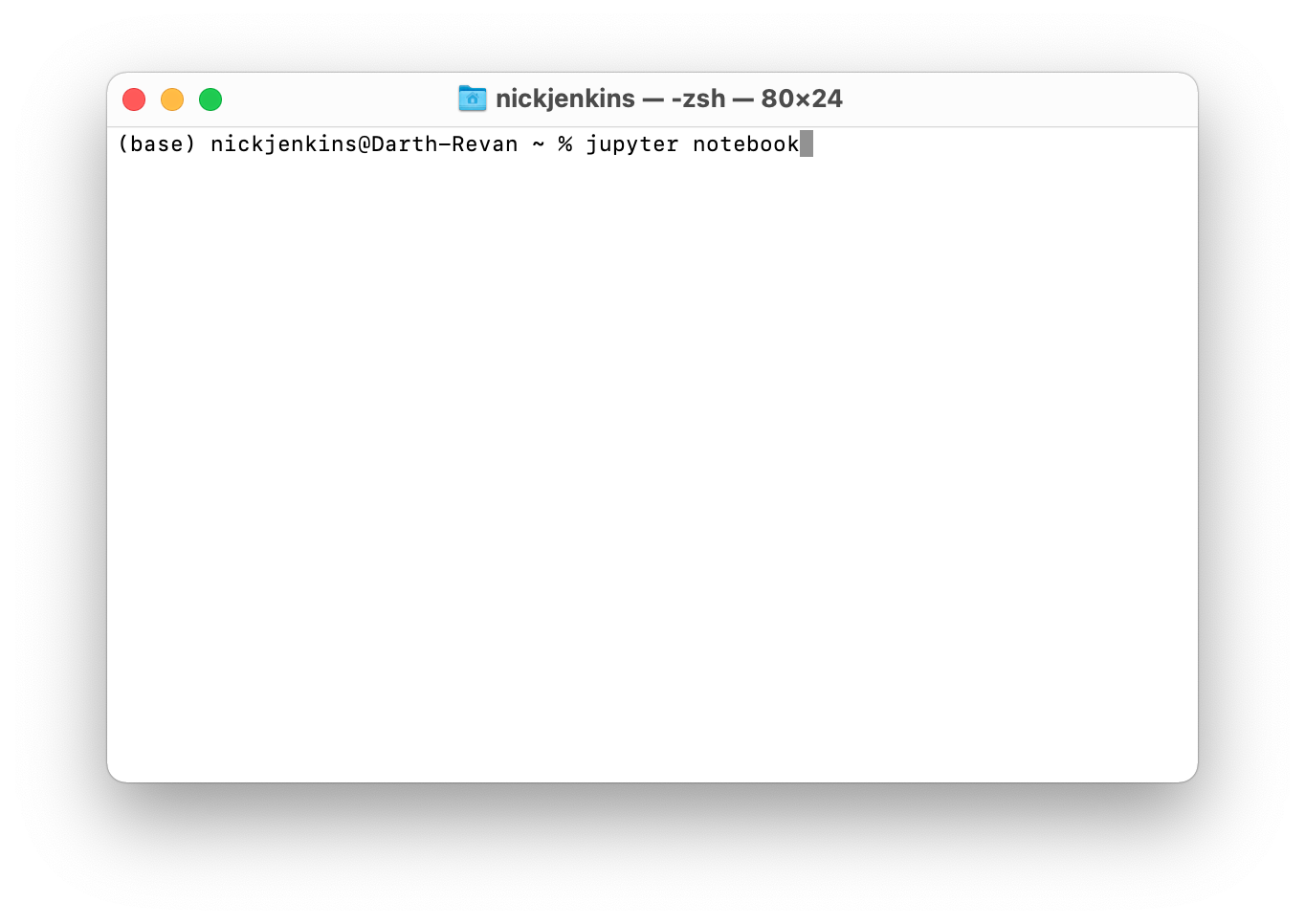

To create a Jupyter Notebook, open Terminal on MacOS or the Anaconda Prompt on Windows and type jupyter notebook (there are many different ways to create a Jupyter notebook).

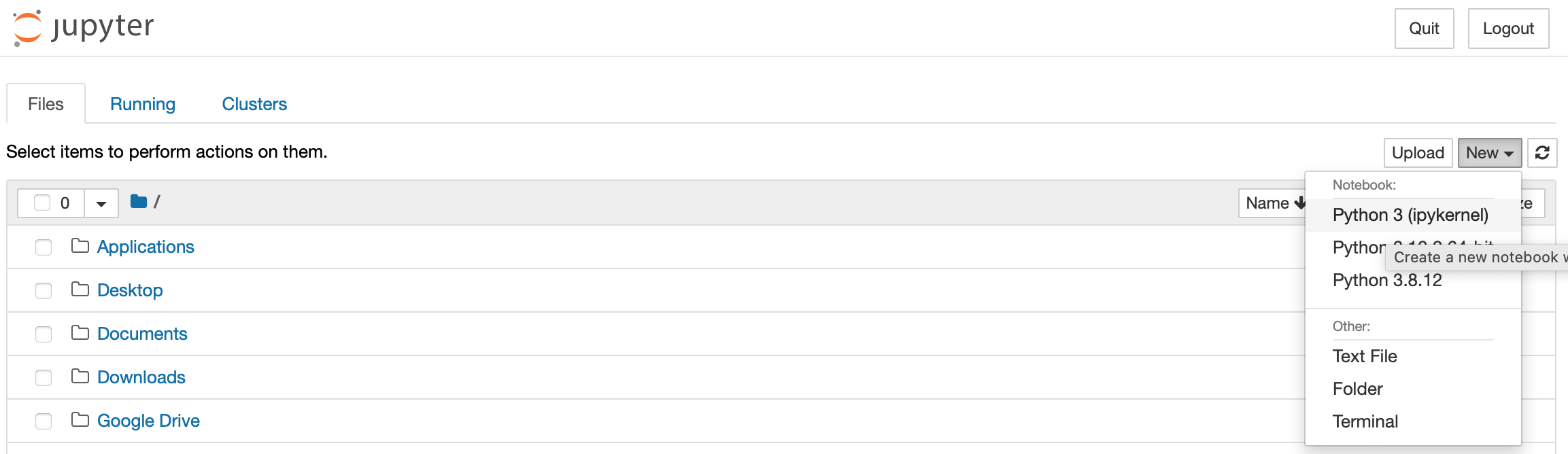

After running this command, your browser will open with an instance of Jupyter. From there, click on NEW then select the Python 3 kernel.

Step 1: Get the Policy Data

About the dataset

The Correlates of State Policy Project includes more than 900 variables, with observations across the 50 U.S. states and across time (1900–2016, approximately). These variables represent policy outputs or political, social, or economic factors that may influence policy differences. The codebook includes the variable name, a short description of the variable, the variable time frame, a longer description of the variable, and the variable source(s) and notes.

Begin by downloading the Correlates of State Policy Project codebook. After the file is downloaded, open it and review the table of contents. The table of contents simply lists the categories of variables available in the data. Scrolling down to page 3, we see examples of types of data (or variables) that are available under each category:

Unit of Analysis and Data Type

Before you begin thinking about your research question, you first need to identify what type of data the Correlates of State Policy Project are and what the unit of analysis is. Does the data span multiple years or is it just a single year? Do the variables contain data on individuals, ZIP codes, counties, Congressional districts, states, countries, …? This is an essential first step because the answers to these questions will narrow the range of research questions that you can answer.

For example, suppose we were interested in knowing if going to college leads to increases in pay rates. In addition to a research design that would allow us to identify this causal effect, we would need data on individual people; whether or not they have a college degree and what their pay rates are. With this data, the unit of analysis would be the individual-level.

Let’s suppose we have this data. Now we need to decide if we need cross-sectional data, or panel data. Cross-sectional data are data that only contain a single point in time. An example of this would be a survey of a randomly sampled respondents in year, say, 2020. Panel data (also known as longitudinal data) contains data on the same variables and units across multiple years. An example of this would be if we surveyed the same individuals every year for 5 years. Thus, we would have multiple measurements of a variable for the same individual over multiple time points. Note that we don’t need data on individuals to construct a panel dataset. We could also have a panel of, say, the GDP of all 50 states over a 10 year period.

What if we have data for multiple years, but the variables aren’t measuring the same individuals? In this case, we would have a pooled-cross-section. For example, the General Social Survey (GSS) conducts surveys of a random sample of individuals every year, but they don’t survey the same individuals. This means that we cannot compare the values of a variable in one year to the values of the same variable in another year, since each year is measuring a different person.

Getting back to the Correlates of State Policy Project data, we can see in the “About the Correlates of State Policy Project” section that the data measures all 50 states from 1900 to 2018. Thus, the unit of analysis is at the state-level and because it measures states (the same states) over time, it is a panel dataset. This should orient our research question to one about state-level outcomes and programs.

Research Question and Theory

In this guide, I will consider the following research question:

Do policies that ban financial incentives for doctors to preform less costly procedures and prescribe less costly drugs affect per capita state spending on health care expenditures?

In addition to a precise research question, you also need to develop an explanation for why you think these two variables are related. For my research question, my theory might be that incentivising doctors to preform less costly procedures and prescribe less costly drugs will make them more likely to do these things which will ultimately lead to a reduction in, both private and public, health care expenditures.

Selecting Variables

In deciding which variables we need to answer our research question, we need to consider three different categories of variables: the outcome variable, the predictor of interest (usually the policy intervention or treatment), and controls.

The Outcome and Predictor of Interest

For my question, policies banning financial incentives for doctors will be my predictor of interest (or treatment) and state health care expenditures will be my outcome. Here are the descriptions given to each of these variables in the codebook:

| Variable Name | Variable Description | Variable Coding | Dates Available |

|---|---|---|---|

banfaninc |

Did state adopt a ban on financial incentives for doctors to perform less costly procedures/prescribe less costly drugs? | 0 = no, 1 = yes | 1996 - 2001 |

healthspendpc |

Health care expenditures per capita (in dollars), measuring spending for all privately and publicly funded personal health care services and products (hospital care, physician services, nursing home care, prescription drugs, etc.) by state of residence. | in dollars | 1991 - 2009 |

There are a few things to note about this information. First, data are available for both of these variables from 1996 to 2001. If the years of collection for these variables didn’t overlap, then we wouldn’t be able to use them to answer our research question. Second, banfaninc is an indicator variable (also known as a dummy variable) that simply indicates a binary, “yes” “no” status. Conversely, healthspendpc is continuous meaning that it could be any positive number (since it is measured in dollars). Note that when working with money as a variable, it is important to control for inflation!

Controls

Now that we have the outcome and treatment, we need to think about what controls we need. The important criterion for identifying control variables is to pick variables that affect both the treatment and outcome.

For my question I will need to identify variables that affect policies that prohibit financial incentives and affect a state’s health care spending per capita. Here are some examples:

| Variable Name | Variable Description | Variable Coding | Dates Available |

|---|---|---|---|

sen_cont_alt |

Party in control of state senate | 1 = Democrats, 0 = Republicans, .5 = split | 1937 – 2011 |

hs_cont_alt |

Party in control of state house | 1 = Democrats, 0 = Republicans, .5 = split | 1937 – 2011 |

hou_chamber |

State house ideological median | Scale is -1 (liberal) to +1 (conservative) | 1993 - 2016 |

sen_chamber |

State senate ideological median | Scale is -1 (liberal) to +1 (conservative) | 1993 - 2016 |

Perhaps Democrats are more likely to regulate the health care industry including policies about prescription drugs and they are also more likely than Republicans to allocate public funds for health care.

Primary Keys

Finally, in addition to your outcome, predictor of interest, and control variables, you need to keep variables that uniquely identify each row of data. For this dataset, this will be the state and the year. This means that the level of data we have is “state-year” because each row of data contains measures for a given state and a given year. This also means that the state and year variables combine uniquely identify each row of data.

Now that we know what variables we need, we can start working with the data. Download the .csv (linked here) file containing the data and save it to your project’s folder.

Step 2: Prepare the Data for Analysis

Preparing your data for analysis is easily the most time-consuming component of any project and it’s often referred to as “data cleaning,” “data wrangling,” or “data munging.” It is essential that we know the data inside and out: what the unit of analysis is, what the variables we need are measuring, how many missing values we have and where they come from, what the distributions of the variables look like, etc. Without such a detailed understanding, we could easily reach flawed conclusions, build bad statistical models, or otherwise produce errors in our analysis.

Importing Data

Now, let’s get the data loaded into Python and start cleaning. To do so, we’ll use the pandas library, which you should already have some familiarity with.

import pandas as pd

policy_data_raw = pd.read_csv("correlatesofstatepolicyprojectv2.csv")

policy_data_raw.head()

/Users/nickjenkins/opt/anaconda3/lib/python3.9/site-packages/IPython/core/interactiveshell.py:3444: DtypeWarning: Columns (36,485,495,496,497,498,499,500,501,502,505,511,513,516,518,521,522,525,532,537,539,541,543,544,671,843,844,1087,1093,1124,1318,1513,1619,1620,1890) have mixed types.Specify dtype option on import or set low_memory=False.

exec(code_obj, self.user_global_ns, self.user_ns)

| year | st | stateno | state | state_fips | state_icpsr | poptotal | popdensity | popfemale | pctpopfemale | ... | nwcirr | regulation_boehmke_cogrowman | regulation_boehmke_livingwill | regulation_housing_directstateai | regulation_housing_enabling_federal_aid | regulation_rent_control | regulation_rfra | regulation_sedition_laws | regulations_lemonlaw | regulations_state_debt_limitatio | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1900 | AL | 1.0 | Alabama | 1 | 41 | 1830000.0 | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 1 | 1900 | AK | 2.0 | Alaska | 2 | 81 | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 2 | 1900 | AZ | 3.0 | Arizona | 4 | 61 | 124000.0 | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 3 | 1900 | AR | 4.0 | Arkansas | 5 | 42 | 1314000.0 | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 4 | 1900 | CA | 5.0 | California | 6 | 71 | 1490000.0 | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

5 rows × 2091 columns

When importing your data for the first time, it’s a good idea to save the original data to an object that denotes an unchanged file. I usually do this by adding the suffix _raw to the data object and and changes that I make to the data to clean it will be saved in a different object. That way I can always get back to the original data if I need to without re-importing it.

Although we are working with a .csv file for this tutorial, that won’t always be the case. Luckily pandas has you covered. Here is a table of pandas functions to read in other data types:

| File Type | Python Function |

|---|---|

| CSV | pandas.read_csv |

Excel (.xls & .xlsx) |

pandas.read_excel |

| SPSS | pandas.read_spss |

| Stata | pandas.read_stata |

| SAS | pandas.read_sas |

| Feather | pandas.read_feather |

There are a lot of variables in this dataset.

# number of columns

policy_data_raw.shape[1]

# number of rows

policy_data_raw.shape[0]

6120

There are 2,091 variables (assuming each column is one variable)! Let’s make this more manageable by selecting only the variables we actually care about. We’ll do this by indexing the DataFrame with the columns that we want:

policy_data = policy_data_raw[["year", "state", "healthspendpc", "banfaninc", "sen_cont_alt", "hs_cont_alt",

"hou_chamber", "sen_chamber"]]

policy_data.head()

| year | state | healthspendpc | banfaninc | sen_cont_alt | hs_cont_alt | hou_chamber | sen_chamber | |

|---|---|---|---|---|---|---|---|---|

| 0 | 1900 | Alabama | NaN | NaN | NaN | NaN | NaN | NaN |

| 1 | 1900 | Alaska | NaN | NaN | NaN | NaN | NaN | NaN |

| 2 | 1900 | Arizona | NaN | NaN | NaN | NaN | NaN | NaN |

| 3 | 1900 | Arkansas | NaN | NaN | NaN | NaN | NaN | NaN |

| 4 | 1900 | California | NaN | NaN | NaN | NaN | NaN | NaN |

Now that our data is in a more manageable size, we can start to think more critically about it. One of the first things we want to know is if the data is in a usable format. What is a usable format? Well, we want it to know what is known as “tidy.” The concept of tidy data is a philosophy of data first explained in an article in the Journal of Statistical Software by Hadley Wickham called Tidy Data. The tidy data philosophy has three components:

- Each variable has its own column.

- Each observation has its own row.

- Each value has its own cell.

Visually, these three principles look like this:

There is a lot more to be said about tidy data and I encourage you to read the article or Chapter 12 of R for Data Science.

Reflect:

Does our data meet the three conditions of tidy data? Take a minute to think about it.

I say yes. This data looks good. If it wasn’t, we might need to think about how to reshape our data.

Missing Data

Missing data present a challenge to data scientists because they can influence analyses. When we import a dataset that has empty cells, R will automatically populate these cells with NA which means missing. These are known as explicit missing values values because we can see them in the data. When you go to create a plot or estimate a regression, or anything else, these values will be dropped from the dataset. That’s not necessarily a bad thing, but it’s important to know that it’s happening.

The more problematic situation is when missing data is represented with a numeric value like -99 instead of simply having an empty cell. In that case, R won’t know that -99 is a missing value - it will just think that it’s a legitimate value. If you were to run your regression with the -99s in the data, it would seriously influence your results. Before making any inferences with our data, we need to make sure we don’t have any values like this in the dataset!

If we knew about values like this ahead of time, we could fix them with the na_values argument of the pd.read_csv() function. Usually the codebook would tell us how missing values are coded. If there were coded as -99, we could fix them on import with:

data_raw = pd.read_csv("correlatesofstatepolicyprojectv2_2.csv", na_values = -99)

Looking through the codebook, I don’t see any mention of a missing value coding scheme, so we’ll need to look for unusual values in the data with some descriptive statistics:

policy_data.describe()

| year | healthspendpc | banfaninc | sen_cont_alt | hs_cont_alt | hou_chamber | sen_chamber | |

|---|---|---|---|---|---|---|---|

| count | 6120.000000 | 969.000000 | 4900.000000 | 3638.000000 | 3639.000000 | 1007.000000 | 1018.000000 |

| mean | 1959.500000 | 4513.422631 | 0.078980 | 0.570093 | 0.606760 | 0.067393 | 0.069445 |

| std | 34.642644 | 1567.088257 | 0.269734 | 0.489827 | 0.485149 | 0.616347 | 0.602112 |

| min | 1900.000000 | 75.529700 | 0.000000 | 0.000000 | 0.000000 | -1.465000 | -1.449000 |

| 25% | 1929.750000 | 3198.000000 | 0.000000 | 0.000000 | 0.000000 | -0.462500 | -0.462250 |

| 50% | 1959.500000 | 4198.000000 | 0.000000 | 1.000000 | 1.000000 | 0.195000 | 0.195000 |

| 75% | 1989.250000 | 5683.000000 | 0.000000 | 1.000000 | 1.000000 | 0.605000 | 0.613000 |

| max | 2019.000000 | 10349.000000 | 1.000000 | 1.000000 | 1.000000 | 1.228000 | 1.242000 |

The minimums and maximums all look reasonable here so I think we’re ok. It would also be a good idea to create some plots as another way to check for unusual values.

The other type of missing values to be aware of are “implicitly” missing values. To get a sense of what an implicit missing value is, take a look at this example DataFrame:

df = pd.DataFrame(

{"state": ["CA", "CA", "AZ", "AZ", "TX"],

"year": [2000, 2001, 2000, 2001, 2000],

"enrollments": [1521, 1231, 3200, 2785, 6731]}

)

df.head()

| state | year | enrollments | |

|---|---|---|---|

| 0 | CA | 2000 | 1521 |

| 1 | CA | 2001 | 1231 |

| 2 | AZ | 2000 | 3200 |

| 3 | AZ | 2001 | 2785 |

| 4 | TX | 2000 | 6731 |

What do you notice about Texas (TX)? It only has data for 2000. Why don’t we have data for 2001? Well, I don’t know! And that’s the problem. I could be random, but it might not be. Moreover, because the row for Texas in the year 2001 doesn’t exist in the data it’s technically missing even though we don’t see a NaN value that represents it. That’s why its implicitly, not explicitly, missing.

We can make implicitly missing values explicit in Python with the .complete() method from pyjanitor:

import janitor

df.complete("state", "year")

| state | year | enrollments | |

|---|---|---|---|

| 0 | CA | 2000 | 1521.0 |

| 1 | CA | 2001 | 1231.0 |

| 2 | AZ | 2000 | 3200.0 |

| 3 | AZ | 2001 | 2785.0 |

| 4 | TX | 2000 | 6731.0 |

| 5 | TX | 2001 | NaN |

Getting back to our data, we’ve seen that there aren’t any extreme values, but which columns have missing values? We can check with the .isnull() and .any() methods:

policy_data.isnull().any()

year False

state False

healthspendpc True

banfaninc True

sen_cont_alt True

hs_cont_alt True

hou_chamber True

sen_chamber True

dtype: bool

We would also want to check how many missing values there are in each column:

policy_data.isnull().sum()

year 0

state 0

healthspendpc 5151

banfaninc 1220

sen_cont_alt 2482

hs_cont_alt 2481

hou_chamber 5113

sen_chamber 5102

dtype: int64

To check for any implicitly missing values, we can count how many years each state has. If all the missing values are explicit, then there should be the same number of rows for each state. We can check by chaining multiple methods together like this:

policy_data.groupby(["state"]).agg("count")["year"]

state

Alabama 120

Alaska 120

Arizona 120

Arkansas 120

California 120

Colorado 120

Connecticut 120

Delaware 120

District of Columbia 120

Florida 120

Georgia 120

Hawaii 120

Idaho 120

Illinois 120

Indiana 120

Iowa 120

Kansas 120

Kentucky 120

Louisiana 120

Maine 120

Maryland 120

Massachusetts 120

Michigan 120

Minnesota 120

Mississippi 120

Missouri 120

Montana 120

Nebraska 120

Nevada 120

New Hampshire 120

New Jersey 120

New Mexico 120

New York 120

North Carolina 120

North Dakota 120

Ohio 120

Oklahoma 120

Oregon 120

Pennsylvania 120

Rhode Island 120

South Carolina 120

South Dakota 120

Tennessee 120

Texas 120

Utah 120

Vermont 120

Virginia 120

Washington 120

West Virginia 120

Wisconsin 120

Wyoming 120

Name: year, dtype: int64

What should we do with missing values?

There really isn’t a “right” way to handle missing values, so you just need to think carefully about whatever decision you make. The solution that we’ll use here is just to drop all missing values. Why? Missing values can’t be put in a plot or used in a regression, so we don’t necessarily need them. Theoretically, missing values are important because it could mean something more that just “no data.” For example, data might be systematically missing for a particular group of people. That would definitely affect our analyses and we would want to address it. That’s a very different situation than having a missing value for a random, non-systematic reason.

Let’s assume that we’ve spent time thinking about why our data is missing and we have decided that dropping the missing values from our dataset is the best option. We can do that with the .dropna() method:

policy_data = policy_data.dropna()

However, it is a much better idea to only drop rows that are missing the outcome variable. Doing so will ensure that we keep the maximum amount of usable data.

policy_data = policy_data.dropna(subset = ["healthspendpc"])

After dropping NaN values, it’s a good idea to check that it worked:

policy_data.isnull().any()

year False

state False

healthspendpc False

banfaninc False

sen_cont_alt False

hs_cont_alt False

hou_chamber False

sen_chamber False

dtype: bool

Renaming Variables

With the missing values out of the way, let’s turn to assigning more informative names to our variables. The variable names in our dataset aren’t too bad, but they could be better. It’s fairly common to run into cryptic variable names like CMP23A__1 when working with data and names like that only make it harder for you, as the data scientist, and anyone else trying to understand the data. In those situations it a good idea to give your variables names that are more easily understood.

There are some rules (more like guidelines) for variable names, however. First, we never want to have spaces. So, Senate Chamber would be a bad name because of the space. Spaces just make it harder to work with. Instead, we use _ instead of a space. Second, variables are easier to work with when they are all lower case. Python is a case sensitive language. That means that it sees x as different from X. So, instead of Senate_Chamber, we’ll just use senate_chamber. Finally, we want to try not to have variable names that are too long. Sometimes it’s hard to use short names when the variables are complicated, but do your best. Working with senate_chamber_california_legislative_session_2022 is a bit cumbersome to type out.

Reflect:

What does the

sen_chambervariable measure?

If you had to go look it up, it probably needs a new name! To rename variables in pandas we use the .rename() method where we set the old name equal to the new name like this:

policy_data = policy_data.rename(columns = {"healthspendpc": "health_spend_per_cap",

"banfaninc": "fin_incentive_ban",

"sen_cont_alt": "senate_prty_control",

"hs_cont_alt": "house_prty_control",

"hou_chamber": "house_ideology",

"sen_chamber": "senate_ideology"})

policy_data.head()

| year | state | health_spend_per_cap | fin_incentive_ban | senate_prty_control | house_prty_control | house_ideology | senate_ideology | |

|---|---|---|---|---|---|---|---|---|

| 4746 | 1993 | Arkansas | 2609.0 | 0.0 | 1.0 | 1.0 | 0.182 | 0.064 |

| 4747 | 1993 | California | 2948.0 | 0.0 | 1.0 | 1.0 | -0.968 | -0.720 |

| 4753 | 1993 | Georgia | 2867.0 | 0.0 | 1.0 | 1.0 | -0.187 | -0.270 |

| 4758 | 1993 | Iowa | 2837.0 | 0.0 | 1.0 | 0.0 | 0.102 | -0.476 |

| 4783 | 1993 | South Carolina | 2612.0 | 0.0 | 1.0 | 1.0 | 0.214 | 0.052 |

Variable Types

Python allows for different types of data and it’s important to ensure that our variables have the right types. There are three main types that you will use as a data scientist:

- numeric: standard numeric data with some definied level of precision

- strings: character data like words or sentences (these are listed as objects in Python)

- categorical: data that has discrete categories, can be ordered or unordered

To check what types of variables we have in a DataFrame, we use the .dtypes() method:

policy_data.dtypes

year int64

state object

health_spend_per_cap float64

fin_incentive_ban float64

senate_prty_control float64

house_prty_control float64

house_ideology float64

senate_ideology float64

dtype: object

Let’s see if these data types make sense:

year:int64which is a numeric type so that’s goodstate:objectwhich indicates a stringhealth_spend_per_cap:float64which is another numeric type

These all seem good. But, fin_incentive_ban, senate_prty_control, and house_prty_control probably shouldn’t be a continuous numeric type like float64 because they are categories. Does the state have a financial incentive ban (fin_incentive_ban)? Yes or no? Who control the senate for a given year (senate_prty_control)? The Democrats or Republicans? So, these should be categorical data. We can change the type with the mutate function which is used to create new variables. Essentially, we are replacing the old fin_incentive_ban with a new categorical version of fin_incentive_ban:

policy_data = policy_data.assign(fin_incentive_ban = pd.Categorical(policy_data["fin_incentive_ban"]))

Now, if we check the data types again, we will see the fin_incentive_ban is now a categorical data type with category values of either 0 or 1:

policy_data.dtypes

year int64

state object

health_spend_per_cap float64

fin_incentive_ban category

senate_prty_control float64

house_prty_control float64

house_ideology float64

senate_ideology float64

dtype: object

Creating and Manipulating Variables

Alright, that looks good but we can make it better. What if instead of saying either 0 or 1, we had it say “Yes” or “No”? That would make the data easier to understand. Essentially, what we want to do is change the names of the categories. Luckily pandas has a method for that! It’s called .rename_categories(). Let’s see how it works.

policy_data

| year | state | health_spend_per_cap | fin_incentive_ban | senate_prty_control | house_prty_control | house_ideology | senate_ideology | |

|---|---|---|---|---|---|---|---|---|

| 4746 | 1993 | Arkansas | 2609.0 | 0.0 | 1.0 | 1.0 | 0.182 | 0.064 |

| 4747 | 1993 | California | 2948.0 | 0.0 | 1.0 | 1.0 | -0.968 | -0.720 |

| 4753 | 1993 | Georgia | 2867.0 | 0.0 | 1.0 | 1.0 | -0.187 | -0.270 |

| 4758 | 1993 | Iowa | 2837.0 | 0.0 | 1.0 | 0.0 | 0.102 | -0.476 |

| 4783 | 1993 | South Carolina | 2612.0 | 0.0 | 1.0 | 1.0 | 0.214 | 0.052 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 5602 | 2009 | Texas | 5924.0 | 1.0 | 0.0 | 0.0 | 0.566 | 0.779 |

| 5604 | 2009 | Vermont | 7635.0 | 1.0 | 1.0 | 1.0 | -0.754 | -0.721 |

| 5606 | 2009 | Washington | 6782.0 | 0.0 | 1.0 | 1.0 | -0.794 | -0.623 |

| 5608 | 2009 | Wisconsin | 7233.0 | 0.0 | 1.0 | 1.0 | -0.798 | -0.880 |

| 5609 | 2009 | Wyoming | 7040.0 | 0.0 | 0.0 | 0.0 | 0.304 | 0.353 |

687 rows × 8 columns

policy_data["fin_incentive_ban"] = policy_data["fin_incentive_ban"].cat.rename_categories({0: "No", 1: "Yes"})

policy_data

| year | state | health_spend_per_cap | fin_incentive_ban | senate_prty_control | house_prty_control | house_ideology | senate_ideology | |

|---|---|---|---|---|---|---|---|---|

| 4746 | 1993 | Arkansas | 2609.0 | No | 1.0 | 1.0 | 0.182 | 0.064 |

| 4747 | 1993 | California | 2948.0 | No | 1.0 | 1.0 | -0.968 | -0.720 |

| 4753 | 1993 | Georgia | 2867.0 | No | 1.0 | 1.0 | -0.187 | -0.270 |

| 4758 | 1993 | Iowa | 2837.0 | No | 1.0 | 0.0 | 0.102 | -0.476 |

| 4783 | 1993 | South Carolina | 2612.0 | No | 1.0 | 1.0 | 0.214 | 0.052 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 5602 | 2009 | Texas | 5924.0 | Yes | 0.0 | 0.0 | 0.566 | 0.779 |

| 5604 | 2009 | Vermont | 7635.0 | Yes | 1.0 | 1.0 | -0.754 | -0.721 |

| 5606 | 2009 | Washington | 6782.0 | No | 1.0 | 1.0 | -0.794 | -0.623 |

| 5608 | 2009 | Wisconsin | 7233.0 | No | 1.0 | 1.0 | -0.798 | -0.880 |

| 5609 | 2009 | Wyoming | 7040.0 | No | 0.0 | 0.0 | 0.304 | 0.353 |

687 rows × 8 columns

That’s much easier to understand. Still skeptical that labeling the values makes it easier? Pause and think about what a value of 1 means for senate_prty_control? Did you have to look it up? I know I did! If we had the value labeled, we wouldn’t need to look it up.

Now let’s use these tools to clean up the other categorical variables (senate_prty_control and house_prty_control). Before we do though, we need to check how many categories there are in these variables:

policy_data["senate_prty_control"].unique()

policy_data["house_prty_control"].unique()

array([1. , 0. , 0.5])

Ok, now let’s look in the codebook to figure out what these values mean and label them appropriately.

policy_data = policy_data.assign(senate_prty_control = pd.Categorical(policy_data["senate_prty_control"]),

house_prty_control = pd.Categorical(policy_data["house_prty_control"]))

policy_data["senate_prty_control"] = policy_data["senate_prty_control"].cat.rename_categories({0: "Republican",

0.5: "Split",

1: "Democrat"})

policy_data["house_prty_control"] = policy_data["house_prty_control"].cat.rename_categories({0: "Republican",

0.5: "Split",

1: "Democrat"})

policy_data

| year | state | health_spend_per_cap | fin_incentive_ban | senate_prty_control | house_prty_control | house_ideology | senate_ideology | |

|---|---|---|---|---|---|---|---|---|

| 4746 | 1993 | Arkansas | 2609.0 | No | Democrat | Democrat | 0.182 | 0.064 |

| 4747 | 1993 | California | 2948.0 | No | Democrat | Democrat | -0.968 | -0.720 |

| 4753 | 1993 | Georgia | 2867.0 | No | Democrat | Democrat | -0.187 | -0.270 |

| 4758 | 1993 | Iowa | 2837.0 | No | Democrat | Republican | 0.102 | -0.476 |

| 4783 | 1993 | South Carolina | 2612.0 | No | Democrat | Democrat | 0.214 | 0.052 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 5602 | 2009 | Texas | 5924.0 | Yes | Republican | Republican | 0.566 | 0.779 |

| 5604 | 2009 | Vermont | 7635.0 | Yes | Democrat | Democrat | -0.754 | -0.721 |

| 5606 | 2009 | Washington | 6782.0 | No | Democrat | Democrat | -0.794 | -0.623 |

| 5608 | 2009 | Wisconsin | 7233.0 | No | Democrat | Democrat | -0.798 | -0.880 |

| 5609 | 2009 | Wyoming | 7040.0 | No | Republican | Republican | 0.304 | 0.353 |

687 rows × 8 columns

Perfect.

Sometimes when we have categorical data, we would rather work with a single value of the category instead of every possible category. An easy way to accomplish this is to create an indicator, or dummry, variable. For example, suppose we just wanted to know of the Senate was controlled by the Democrats in a given year. To do this, we could create an indicator variable equal to 1 if senate_prty_control equals “Democrat”. The NumPy library provides an easy way to do this with the .where() method:

import numpy as np

policy_data.assign(senate_dem_control = np.where(policy_data["senate_prty_control"] == "Democrat", 1, 0))

| year | state | health_spend_per_cap | fin_incentive_ban | senate_prty_control | house_prty_control | house_ideology | senate_ideology | senate_dem_control | |

|---|---|---|---|---|---|---|---|---|---|

| 4746 | 1993 | Arkansas | 2609.0 | No | Democrat | Democrat | 0.182 | 0.064 | 1 |

| 4747 | 1993 | California | 2948.0 | No | Democrat | Democrat | -0.968 | -0.720 | 1 |

| 4753 | 1993 | Georgia | 2867.0 | No | Democrat | Democrat | -0.187 | -0.270 | 1 |

| 4758 | 1993 | Iowa | 2837.0 | No | Democrat | Republican | 0.102 | -0.476 | 1 |

| 4783 | 1993 | South Carolina | 2612.0 | No | Democrat | Democrat | 0.214 | 0.052 | 1 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 5602 | 2009 | Texas | 5924.0 | Yes | Republican | Republican | 0.566 | 0.779 | 0 |

| 5604 | 2009 | Vermont | 7635.0 | Yes | Democrat | Democrat | -0.754 | -0.721 | 1 |

| 5606 | 2009 | Washington | 6782.0 | No | Democrat | Democrat | -0.794 | -0.623 | 1 |

| 5608 | 2009 | Wisconsin | 7233.0 | No | Democrat | Democrat | -0.798 | -0.880 | 1 |

| 5609 | 2009 | Wyoming | 7040.0 | No | Republican | Republican | 0.304 | 0.353 | 0 |

687 rows × 9 columns

The dataset is looking really good, but there is one last thing to do. Because we are going to be using spending on health care as a variable in our model, we need to adjust it for inflation. In order to do that we need data from the Consumer Price Index and the formula:

To get the CPI data, we’ll use the CPI library. You’ll need to install it with pip install cpi or conda install cpi in your command line prompt. How much is $100 in 1950 worth today?

import cpi

cpi.inflate(value = 1, year_or_month = 1950)

11.243568464730291

What if you bought a hamburger for $0.25 in 1943 (the price of a McDonald’s hamburger and fries at the time)?

cpi.inflate(value = 0.25, year_or_month = 1943)

3.9157514450867055

Ok, let’s use cpi.inflate() to adjust the dollar values in our data and use 1996 and the year to adjust to because that’s the first year of our treatment. Note that we’ll need to use the .apply() method which applies a function to every row of a column with the axis = 1 argument:

policy_data["real_health_spend_per_cap"] = policy_data.apply(

lambda x: cpi.inflate(x.health_spend_per_cap, x.year, to=1996), axis = 1

)

policy_data

| year | state | health_spend_per_cap | fin_incentive_ban | senate_prty_control | house_prty_control | house_ideology | senate_ideology | real_health_spend_per_cap | |

|---|---|---|---|---|---|---|---|---|---|

| 4746 | 1993 | Arkansas | 2609.0 | No | Democrat | Democrat | 0.182 | 0.064 | 2832.886505 |

| 4747 | 1993 | California | 2948.0 | No | Democrat | Democrat | -0.968 | -0.720 | 3200.977163 |

| 4753 | 1993 | Georgia | 2867.0 | No | Democrat | Democrat | -0.187 | -0.270 | 3113.026298 |

| 4758 | 1993 | Iowa | 2837.0 | No | Democrat | Republican | 0.102 | -0.476 | 3080.451903 |

| 4783 | 1993 | South Carolina | 2612.0 | No | Democrat | Democrat | 0.214 | 0.052 | 2836.143945 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 5602 | 2009 | Texas | 5924.0 | Yes | Republican | Republican | 0.566 | 0.779 | 4332.472254 |

| 5604 | 2009 | Vermont | 7635.0 | Yes | Democrat | Democrat | -0.754 | -0.721 | 5583.799065 |

| 5606 | 2009 | Washington | 6782.0 | No | Democrat | Democrat | -0.794 | -0.623 | 4959.964016 |

| 5608 | 2009 | Wisconsin | 7233.0 | No | Democrat | Democrat | -0.798 | -0.880 | 5289.799429 |

| 5609 | 2009 | Wyoming | 7040.0 | No | Republican | Republican | 0.304 | 0.353 | 5148.650349 |

687 rows × 9 columns

Finally, when working with data that is strictly positive and continuous it is a good idea to use a

policy_data = policy_data.assign(log_health_spend_cap_adj = np.log(policy_data["real_health_spend_per_cap"]))

policy_data

| year | state | health_spend_per_cap | fin_incentive_ban | senate_prty_control | house_prty_control | house_ideology | senate_ideology | real_health_spend_per_cap | log_health_spend_cap_adj | |

|---|---|---|---|---|---|---|---|---|---|---|

| 4746 | 1993 | Arkansas | 2609.0 | No | Democrat | Democrat | 0.182 | 0.064 | 2832.886505 | 7.949051 |

| 4747 | 1993 | California | 2948.0 | No | Democrat | Democrat | -0.968 | -0.720 | 3200.977163 | 8.071211 |

| 4753 | 1993 | Georgia | 2867.0 | No | Democrat | Democrat | -0.187 | -0.270 | 3113.026298 | 8.043351 |

| 4758 | 1993 | Iowa | 2837.0 | No | Democrat | Republican | 0.102 | -0.476 | 3080.451903 | 8.032832 |

| 4783 | 1993 | South Carolina | 2612.0 | No | Democrat | Democrat | 0.214 | 0.052 | 2836.143945 | 7.950201 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 5602 | 2009 | Texas | 5924.0 | Yes | Republican | Republican | 0.566 | 0.779 | 4332.472254 | 8.373894 |

| 5604 | 2009 | Vermont | 7635.0 | Yes | Democrat | Democrat | -0.754 | -0.721 | 5583.799065 | 8.627625 |

| 5606 | 2009 | Washington | 6782.0 | No | Democrat | Democrat | -0.794 | -0.623 | 4959.964016 | 8.509154 |

| 5608 | 2009 | Wisconsin | 7233.0 | No | Democrat | Democrat | -0.798 | -0.880 | 5289.799429 | 8.573536 |

| 5609 | 2009 | Wyoming | 7040.0 | No | Republican | Republican | 0.304 | 0.353 | 5148.650349 | 8.546490 |

687 rows × 10 columns

Going Deeper: Data Cleaning in a Real Workflow

We did a lot to clean this data and we took our time doing. Obviously, we went slow so that you could learn each step and think critically about the code. In a real workflow, however, there’s a better way to write code than taking each step one-at-a-time and it’s referred to as method chaining. Method chaining is a way of writing code where we add multiple steps together, instead of writing each step individually. Here is what my code would like if I were doing these steps for an actual project:

# import libraries

import pandas as pd

import numpy as np

# import data

policy_data_raw = pd.read_csv("correlatesofstatepolicyprojectv2.csv")

# select variables, convert types, drop missing

policy_data = policy_data_raw \

.loc[:, ["year", "state", "healthspendpc", "banfaninc", "sen_cont_alt",

"hs_cont_alt", "hou_chamber", "sen_chamber"]] \

.rename(columns = {"healthspendpc": "health_spend_per_cap",

"banfaninc": "fin_incentive_ban",

"sen_cont_alt": "senate_prty_control",

"hs_cont_alt": "house_prty_control",

"hou_chamber": "house_ideology",

"sen_chamber": "senate_ideology"}) \

.assign(fin_incentive_ban = lambda x: pd.Categorical(x.fin_incentive_ban),

senate_prty_control = lambda x: pd.Categorical(x.senate_prty_control),

house_prty_control = lambda x: pd.Categorical(x.house_prty_control),

senate_dem_control = lambda x: np.where(x.senate_prty_control == "Democrat", 1, 0)) \

.assign(senate_prty_control = lambda x: x.senate_prty_control.cat.rename_categories({0:"Republican", 0.5: "Split", 1: "Democrat"}),

house_prty_control = lambda x: x.house_prty_control.cat.rename_categories({0:"Republican", 0.5: "Split", 1: "Democrat"}))

# examine missing values

policy_data.describe()

# look for implicitly missing values

policy_data.groupby(["state"])["year"].agg("count")

# drop missing values

policy_data = policy_data.dropna(subset = ["health_spend_per_cap"])

# adjust for inflation

import cpi

policy_data = policy_data.assign(

real_health_spend_per_cap = policy_data.apply(

lambda x: cpi.inflate(x.health_spend_per_cap, x.year, to=1996), axis = 1

),

log_real_health_spend_cap = lambda x: np.log(x.real_health_spend_per_cap)

)

policy_data

/Users/nickjenkins/opt/anaconda3/lib/python3.9/site-packages/IPython/core/interactiveshell.py:3444: DtypeWarning: Columns (36,485,495,496,497,498,499,500,501,502,505,511,513,516,518,521,522,525,532,537,539,541,543,544,671,843,844,1087,1093,1124,1318,1513,1619,1620,1890) have mixed types.Specify dtype option on import or set low_memory=False.

exec(code_obj, self.user_global_ns, self.user_ns)

| year | state | health_spend_per_cap | fin_incentive_ban | senate_prty_control | house_prty_control | house_ideology | senate_ideology | senate_dem_control | real_health_spend_per_cap | log_real_health_spend_cap | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 4641 | 1991 | Alabama | 2571.0000 | 0.0 | Democrat | Democrat | NaN | NaN | 0 | 2961.746696 | 7.993534 |

| 4642 | 1991 | Alaska | 2589.0000 | 0.0 | Split | Democrat | NaN | NaN | 0 | 2982.482379 | 8.000511 |

| 4643 | 1991 | Arizona | 2457.0000 | 0.0 | Democrat | Republican | NaN | NaN | 0 | 2830.420705 | 7.948181 |

| 4644 | 1991 | Arkansas | 2380.0000 | 0.0 | Democrat | Democrat | NaN | NaN | 0 | 2741.718062 | 7.916340 |

| 4645 | 1991 | California | 2677.0000 | 0.0 | Democrat | Democrat | NaN | NaN | 0 | 3083.856828 | 8.033936 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 5605 | 2009 | Virginia | 6286.0000 | 0.0 | Democrat | Republican | NaN | NaN | 0 | 4597.218195 | 8.433207 |

| 5606 | 2009 | Washington | 6782.0000 | 0.0 | Democrat | Democrat | -0.794 | -0.623 | 0 | 4959.964016 | 8.509154 |

| 5607 | 2009 | West Virginia | 75.5297 | 1.0 | Democrat | Democrat | NaN | NaN | 0 | 55.238070 | 4.011652 |

| 5608 | 2009 | Wisconsin | 7233.0000 | 0.0 | Democrat | Democrat | -0.798 | -0.880 | 0 | 5289.799429 | 8.573536 |

| 5609 | 2009 | Wyoming | 7040.0000 | 0.0 | Republican | Republican | 0.304 | 0.353 | 0 | 5148.650349 | 8.546490 |

969 rows × 11 columns

You might have noticed that I introduce a new operator here: lambda. The lambda operator is an annonoymous function that allows us to use the variables we create to calculate others. Check out this article to learn more: https://www.educba.com/pandas-lambda/.

For a real project, I would also save the cleaned data to my project’s working directory:

policy_data.to_csv("Policy Project/Data/policy_data.csv")

Step 3: Exploratory Data Analysis

Before running any models, it is important that you become an expert on your data. This is easy with a small dataset, but can get harder when you are working with a lot of variables. Here are some examples of questions you should ask about your data:

- What do the distributions of my variables look like?

- What are means and standard deviations?

- What is the relationship between variables?

Answering these questions will help you identify errors in your data - errors that could lead to erroneous regression results, help you understand what your data measures and the types of questions you can as, and also help you generate hypotheses.

There are essentially two ways of conducting EDA: descriptive methods and visual methods. We’ll explore both here.

Descriptive Methods

When exploring data descriptively, we are mainly interested in calculating various statistics over the data and possibly within groups. In general, to conduct descriptive EDA we need to know how to:

- Summarize and aggregate data

- Filter data

- Arrange data

Let’s see some examples of these.

Summarizing and Aggregating Data

With our interest in predicting healthcare spending, we might want to know what the average amount of healthcare spending is in the US. We’ve already seen how to do with the .describe() method, but we could also use .mean() and .median().

policy_data.real_health_spend_per_cap.mean()

3976.44511145127

policy_data.real_health_spend_per_cap.median()

3846.8745644599308

What if we want to know the average spending by state? To do that, we need to use .groupby() and .aggregate() methods to group the data into states, calculate the function we want within those states, and then show use the results for each state.

policy_data \

.groupby("state") \

.aggregate({"real_health_spend_per_cap": "mean"}) \

.head(5)

| real_health_spend_per_cap | |

|---|---|

| state | |

| Alabama | 3792.283564 |

| Alaska | 4502.528923 |

| Arizona | 3222.403098 |

| Arkansas | 3552.535751 |

| California | 3596.155894 |

How does House ideology differ by states?

policy_data \

.groupby("state") \

.aggregate({"house_ideology": "mean"}) \

.head(5)

| house_ideology | |

|---|---|

| state | |

| Alabama | 0.086357 |

| Alaska | 0.693143 |

| Arizona | 0.459429 |

| Arkansas | 0.231250 |

| California | -0.950059 |

Filtering Data

It’s important to be able to calculate statistics by groups, but sometimes we want to pull out specific values. For example, we might want to know which state had the highest amount of healthcare spending, or has the most liberal House. This is when we need filters. So, let’s see which state has the highest level of spending:

policy_data.loc[

policy_data.real_health_spend_per_cap == policy_data.real_health_spend_per_cap.max(),

["year", "state", "real_health_spend_per_cap"]

]

| year | state | real_health_spend_per_cap | |

|---|---|---|---|

| 5567 | 2009 | District of Columbia | 7568.662282 |

Which state has the highest average spending? We’ll need to use our .groupby() skills for that. The state with the highest average spending is:

policy_data \

.groupby("state") \

.aggregate({"real_health_spend_per_cap": "mean"}) \

.loc[:, "real_health_spend_per_cap"] \

.nlargest(n=1)

state

District of Columbia 6302.281066

Name: real_health_spend_per_cap, dtype: float64

What if we only wanted to look at the data for California? We can do that like this:

policy_data.loc[policy_data.state == "California", :]

| year | state | health_spend_per_cap | fin_incentive_ban | senate_prty_control | house_prty_control | house_ideology | senate_ideology | senate_dem_control | real_health_spend_per_cap | log_real_health_spend_cap | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 4645 | 1991 | California | 2677.0 | 0.0 | Democrat | Democrat | NaN | NaN | 0 | 3083.856828 | 8.033936 |

| 4696 | 1992 | California | 2847.0 | 0.0 | Democrat | Democrat | NaN | NaN | 0 | 3183.851033 | 8.065847 |

| 4747 | 1993 | California | 2948.0 | 0.0 | Democrat | Democrat | -0.968 | -0.720 | 0 | 3200.977163 | 8.071211 |

| 4798 | 1994 | California | 3008.0 | 0.0 | Democrat | Democrat | -0.968 | -0.720 | 0 | 3184.582996 | 8.066077 |

| 4849 | 1995 | California | 3069.0 | 0.0 | Democrat | Split | 0.377 | -0.773 | 0 | 3159.620079 | 8.058207 |

| 4900 | 1996 | California | 3144.0 | 1.0 | Democrat | Republican | 0.377 | -0.773 | 0 | 3144.000000 | 8.053251 |

| 4951 | 1997 | California | 3213.0 | 1.0 | Democrat | Democrat | -0.848 | -0.835 | 0 | 3140.932710 | 8.052275 |

| 5002 | 1998 | California | 3389.0 | 1.0 | Democrat | Democrat | -0.848 | -0.835 | 0 | 3262.172393 | 8.090149 |

| 5053 | 1999 | California | 3486.0 | 1.0 | Democrat | Democrat | -0.956 | -1.004 | 0 | 3283.033613 | 8.096523 |

| 5104 | 2000 | California | 3602.0 | 1.0 | Democrat | Democrat | -0.956 | -1.004 | 0 | 3281.961672 | 8.096197 |

| 5155 | 2001 | California | 3847.0 | 1.0 | Democrat | Democrat | -1.069 | -1.137 | 0 | 3408.211745 | 8.133943 |

| 5206 | 2002 | California | 4144.0 | 1.0 | Democrat | Democrat | -1.069 | -1.137 | 0 | 3614.194553 | 8.192624 |

| 5257 | 2003 | California | 4499.0 | 1.0 | Democrat | Democrat | -1.295 | -1.137 | 0 | 3836.375543 | 8.252283 |

| 5308 | 2004 | California | 4777.0 | 1.0 | Democrat | Democrat | -1.295 | -1.137 | 0 | 3967.767602 | 8.285959 |

| 5359 | 2005 | California | 5098.0 | 1.0 | Democrat | Democrat | -1.207 | -1.180 | 0 | 4095.628264 | 8.317675 |

| 5410 | 2006 | California | 5384.0 | 1.0 | Democrat | Democrat | -1.207 | -1.180 | 0 | 4190.226190 | 8.340510 |

| 5461 | 2007 | California | 5732.0 | 1.0 | Democrat | Democrat | -1.391 | -1.126 | 0 | 4337.523512 | 8.375059 |

| 5512 | 2008 | California | 6024.0 | 1.0 | Democrat | Democrat | -1.391 | -1.126 | 0 | 4389.932328 | 8.387069 |

| 5563 | 2009 | California | 6238.0 | 1.0 | Democrat | Democrat | -1.437 | -1.140 | 0 | 4562.113761 | 8.425541 |

What about all data for California just for the year 2000? For that we need to use the & operator and parentneses around each conditional:

policy_data.loc[

(policy_data.state == "California") & (policy_data.year == 2000),

:

]

| year | state | health_spend_per_cap | fin_incentive_ban | senate_prty_control | house_prty_control | house_ideology | senate_ideology | senate_dem_control | real_health_spend_per_cap | log_real_health_spend_cap | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 5104 | 2000 | California | 3602.0 | 1.0 | Democrat | Democrat | -0.956 | -1.004 | 0 | 3281.961672 | 8.096197 |

Arranging Data

Instead of returning only one value, like the maximum, you might want to sort the data to see multile values. We can do this with the .sort.values() method. Instead of finding the state with the highest average health spending, let’s list out average spending by all states and put them in decending order.

policy_data \

.groupby("state") \

.aggregate({"real_health_spend_per_cap" : "mean"}) \

.sort_values(by = ["real_health_spend_per_cap"]) \

.head(10)

| real_health_spend_per_cap | |

|---|---|

| state | |

| Utah | 2918.212534 |

| Arizona | 3222.403098 |

| Idaho | 3229.638758 |

| Nevada | 3406.427351 |

| Texas | 3481.811702 |

| Georgia | 3483.831267 |

| New Mexico | 3497.550297 |

| Virginia | 3511.702684 |

| Colorado | 3516.180834 |

| Arkansas | 3552.535751 |

This is almost what we want. The issue is that the default of .sort_values() is ascending order. To change that to descending order, we set the ascending argument = False like so:

policy_data \

.groupby("state") \

.aggregate({"real_health_spend_per_cap" : "mean"}) \

.sort_values(by = ["real_health_spend_per_cap"], ascending = False) \

.head(10)

| real_health_spend_per_cap | |

|---|---|

| state | |

| District of Columbia | 6302.281066 |

| Massachusetts | 5083.730091 |

| Connecticut | 4880.184476 |

| New York | 4844.236550 |

| Delaware | 4631.491237 |

| Rhode Island | 4599.037152 |

| Alaska | 4502.528923 |

| Pennsylvania | 4463.792502 |

| New Jersey | 4456.569432 |

| Maine | 4430.814738 |

Visual Methods

There are several tools for data visualization in Python. pandas has its own built-in plotting methods, but other popular libraries are matplotlib and seaborn. In this guide, I’ll mostly be using seaborn because it is one of the more modern visualization libraries. As always, we start by importing it:

%matplotlib inline

import matplotlib.pyplot as plt

plt.rcParams["figure.figsize"] = (20,10)

import seaborn as sns

Let’s start visualizing our data by looking at the distribution of real_health_spend_per_cap:

sns.displot(data = policy_data, x = "real_health_spend_per_cap")

<seaborn.axisgrid.FacetGrid at 0x7faf4e6124f0>

What if we wanted a density plot instead? Well, then we set kind equal to "kde".

sns.displot(data = policy_data, x = "real_health_spend_per_cap", kind = "kde")

<seaborn.axisgrid.FacetGrid at 0x7faf4e6124c0>

Let’s make a new density plot with our logged outcome variable (log_real_health_spend_per_cap) on the hue equal to "fin_incentive_ban":

sns.displot(data = policy_data.dropna(), x = "log_real_health_spend_cap", kind="kde", hue = "fin_incentive_ban")

<seaborn.axisgrid.FacetGrid at 0x7faf8a556100>

Notice that I also chained the .dropna() method onto the DataFrame. That’s because we only dropped the missing values of the outcome variable, so missing values still exist for other variables.

The distributions look fairly similar, and is seems that states with financial incentive bans spend a little bit more. A better way to compare these groups might be a box plot:

sns.boxplot(

data = policy_data.dropna(),

x = "fin_incentive_ban",

y = "log_real_health_spend_cap"

)

/Users/nickjenkins/opt/anaconda3/lib/python3.9/site-packages/pandas/io/formats/format.py:1429: FutureWarning: Index.ravel returning ndarray is deprecated; in a future version this will return a view on self.

for val, m in zip(values.ravel(), mask.ravel())

<AxesSubplot:xlabel='fin_incentive_ban', ylabel='log_real_health_spend_cap'>

Here it definitely looks like states with financial incentive bans spend more.

What does spending look like across states?

sns.boxplot(

data = policy_data.dropna(),

x = "log_real_health_spend_cap",

y = "state"

)

<AxesSubplot:xlabel='log_real_health_spend_cap', ylabel='state'>

If we order this plot it will be easier to understand. We want to order the states by health spending, se we need to add the .sort_values() method:

sns.boxplot(

data = policy_data.sort_values("health_spend_per_cap", ascending = False),

x = "log_real_health_spend_cap",

y = "state"

)

<AxesSubplot:xlabel='log_real_health_spend_cap', ylabel='state'>

We could add in fin_incentive_ban to see how financial incentive bans affect health spending in different states by mapping fin_incentive_ban to the hue argument:

sns.boxplot(

data = policy_data.sort_values("health_spend_per_cap", ascending = False),

x = "log_real_health_spend_cap",

y = "state",

hue = "fin_incentive_ban"

)

<AxesSubplot:xlabel='log_real_health_spend_cap', ylabel='state'>

This plot is a bit busy, but it stills shows that states with a financial incentive ban spend more. This is especially apparent for the states that have data before and after financial incentive bans.

How does health spending vary by political party and state? To do that, We’ll plot log_real_health_spend_per_cap on the house_prty_control on the

sns.catplot(

data = policy_data,

x = "house_prty_control",

y = "log_real_health_spend_cap",

col = "state",

col_wrap = 5,

kind = "box"

)

<seaborn.axisgrid.FacetGrid at 0x7faf0fa1c340>

It looks like Democrats generally spend more, but that’s not the case for every state.

As a final plot, let’s see how health spending changes over time. To make the plot look like we want, we need to set units = "state" and estimator = None:

sns.lineplot(

data = policy_data.dropna(),

x = "year",

y = "log_real_health_spend_cap",

units = "state",

estimator = None

)

<AxesSubplot:xlabel='year', ylabel='log_real_health_spend_cap'>

If we decided that we wanted to use this plot in a professional paper, we would need to clean it up a bit. To prevent this guide from getting longer, I’m going to quickly format the plot, but check out the resources listed above for more detailed explanations of plot customization.

sns.set_style("white")

line_plt = sns.lineplot(

data = policy_data.dropna(),

x = "year",

y = "log_real_health_spend_cap",

units = "state",

estimator = None,

size = 0.1,

legend = False,

color = "gray"

)

line_plt.set_title("Inflation-Adjusted Health Spending Over Time by State")

line_plt.set_xlabel("Year")

line_plt.set_ylabel("Real Health Spending Per Capita (Logged)")

line_plt.set_xticks(range(1993, 2010, 1))

line_plt.set_xlim(1993, 2010)

(1993.0, 2010.0)

And finally, we can save this figure with the .saveFig() method:

fig = line_plt.get_figure()

fig.savefig("line_plot.pdf")

Going Deeper: We can also make maps!

The process of making maps is fairly straightforward. We need the latitude and longitude for the places that we want to map, and those data need to be joined to the values that we want shown on the map. Let’s start by getting the latitude and longitude coordinates for states. We’ll get the geographic data from the U.S. Census (called a shapefile) and we’ll need to use geopandas to read it into Python.

import geopandas

states = geopandas.read_file("tl_2021_us_state.dbf")

states.head()

| REGION | DIVISION | STATEFP | STATENS | GEOID | STUSPS | NAME | LSAD | MTFCC | FUNCSTAT | ALAND | AWATER | INTPTLAT | INTPTLON | geometry | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 3 | 5 | 54 | 01779805 | 54 | WV | West Virginia | 00 | G4000 | A | 62266298634 | 489204185 | +38.6472854 | -080.6183274 | POLYGON ((-80.85847 37.42831, -80.85856 37.428... |

| 1 | 3 | 5 | 12 | 00294478 | 12 | FL | Florida | 00 | G4000 | A | 138961722096 | 45972570361 | +28.3989775 | -082.5143005 | MULTIPOLYGON (((-83.10874 24.62949, -83.10711 ... |

| 2 | 2 | 3 | 17 | 01779784 | 17 | IL | Illinois | 00 | G4000 | A | 143778561906 | 6216493488 | +40.1028754 | -089.1526108 | POLYGON ((-89.17208 37.06831, -89.17296 37.067... |

| 3 | 2 | 4 | 27 | 00662849 | 27 | MN | Minnesota | 00 | G4000 | A | 206232627084 | 18949394733 | +46.3159573 | -094.1996043 | POLYGON ((-92.74568 45.29604, -92.74629 45.295... |

| 4 | 3 | 5 | 24 | 01714934 | 24 | MD | Maryland | 00 | G4000 | A | 25151992308 | 6979074857 | +38.9466584 | -076.6744939 | POLYGON ((-75.76659 39.37756, -75.76630 39.373... |

To make the map, we just use the geopandas .plot() method:

states.plot()

<AxesSubplot:>

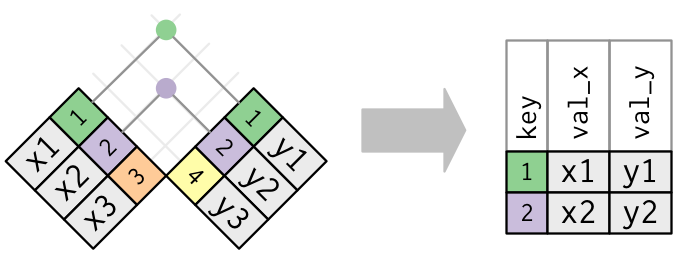

Now we need to merge this data with the policy_data so that we can show the states with financial incentive bans on the map. To do this, we join the tables by matching the variables that are common to both datasets. There are many ways to join two data sets, but we will use an inner join. Visually, it looks like this:

That is, the inner join retains the rows of data that match between the two data sets. With pandas we can impliment this with the .merge() method, setting the how = argument equal to "inner".

The only other caveat is that we have to tell Python the names of the columns to use to look for matches. In states the column we want is called NAME. In policy_data the column we want is called state. So, we set left_on = "NAME" and right_on = "state":

map_data = states \

.merge(policy_data, left_on = "NAME", right_on = "state", how = "inner")

map_data.head()

| REGION | DIVISION | STATEFP | STATENS | GEOID | STUSPS | NAME | LSAD | MTFCC | FUNCSTAT | ... | state | health_spend_per_cap | fin_incentive_ban | senate_prty_control | house_prty_control | house_ideology | senate_ideology | senate_dem_control | real_health_spend_per_cap | log_real_health_spend_cap | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 3 | 5 | 54 | 01779805 | 54 | WV | West Virginia | 00 | G4000 | A | ... | West Virginia | 2606.0 | 0.0 | Democrat | Democrat | NaN | NaN | 0 | 3002.066079 | 8.007056 |

| 1 | 3 | 5 | 54 | 01779805 | 54 | WV | West Virginia | 00 | G4000 | A | ... | West Virginia | 2858.0 | 0.0 | Democrat | Democrat | NaN | NaN | 0 | 3196.152530 | 8.069703 |

| 2 | 3 | 5 | 54 | 01779805 | 54 | WV | West Virginia | 00 | G4000 | A | ... | West Virginia | 3065.0 | 0.0 | Democrat | Democrat | NaN | NaN | 0 | 3328.017301 | 8.110132 |

| 3 | 3 | 5 | 54 | 01779805 | 54 | WV | West Virginia | 00 | G4000 | A | ... | West Virginia | 3463.0 | 0.0 | Democrat | Democrat | NaN | NaN | 0 | 3666.293522 | 8.206936 |

| 4 | 3 | 5 | 54 | 01779805 | 54 | WV | West Virginia | 00 | G4000 | A | ... | West Virginia | 3643.0 | 0.0 | Democrat | Democrat | NaN | NaN | 0 | 3750.568898 | 8.229663 |

5 rows × 26 columns

By merging policy_data to state, the resulting DataFrame is still a geopandas object, which is necessary:

type(map_data)

geopandas.geodataframe.GeoDataFrame

Now, let’s drop Alaska and Hawaii from the data and select the year that we want to make a map for. I’ll use 1996 since that is the first year that any state adopts a ban on financial incentives.

# drop Alaska and Hawaii

map_data = map_data \

.loc[(map_data.NAME != "Alaska") & (map_data.NAME != "Hawaii")] \

.loc[map_data.year == 1996]

map = map_data.plot(column = "fin_incentive_ban")

map.set_axis_off()

Step 4: Develop a Statistical Model and Analyze your Data

After thoroughly exploring our data, we’re ready to make some inferences. We’ll start with a simple linear regression to test the association between the financial incentive bans and health care spending.

Treatment Indicator Model

To test my research question, I will use the following statistical model:

$$\text{Health Spending}{i} = \alpha + \beta{1} \text{T}{i} + \epsilon{i}$$

where fin_incentive_ban). However, because I only want to test my research question for one year of data, we will need to limit our data. I’m doing this for pedagogical reasons. The goal of this guide is to walk you through a data project, and not so much how to apply advanced regression models.

We can fit the model in Python with the smf.ols() function and .fit() method provided by statsmodels package.

import statsmodels.api as sm

import statsmodels.formula.api as smf

model_01 = smf.ols(

"log_real_health_spend_cap ~ fin_incentive_ban",

data = policy_data.loc[policy_data.year == 1996]

)

fit_01 = model_01.fit()

print(fit_01.summary())

OLS Regression Results

=====================================================================================

Dep. Variable: log_real_health_spend_cap R-squared: 0.035

Model: OLS Adj. R-squared: 0.015

Method: Least Squares F-statistic: 1.737

Date: Thu, 21 Apr 2022 Prob (F-statistic): 0.194

Time: 17:04:32 Log-Likelihood: 39.098

No. Observations: 50 AIC: -74.20

Df Residuals: 48 BIC: -70.37

Df Model: 1

Covariance Type: nonrobust

============================================================================================

coef std err t P>|t| [0.025 0.975]

--------------------------------------------------------------------------------------------

Intercept 8.1031 0.017 486.410 0.000 8.070 8.137

fin_incentive_ban[T.1.0] 0.0776 0.059 1.318 0.194 -0.041 0.196

==============================================================================

Omnibus: 1.297 Durbin-Watson: 2.000

Prob(Omnibus): 0.523 Jarque-Bera (JB): 0.660

Skew: 0.249 Prob(JB): 0.719

Kurtosis: 3.262 Cond. No. 3.71

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

Our model says that adopting policies that ban financial incentives for doctors increases a state’s per capita spending on health care by about

Now the model with controls:

$$\text{Health Spending}{i} = \alpha + \beta{1} \text{T}{i} + \beta \text{Dem House}{i} + \beta_{3} \text{Dem Senate}i + \beta{4} \text{Senate Ideo}{i} + \beta{5} \text{House Ideo}{i} + \epsilon{i}$$

model_02 = smf.ols(

"log_real_health_spend_cap ~ fin_incentive_ban + house_prty_control + senate_prty_control + house_ideology + senate_ideology",

data = policy_data.loc[policy_data.year == 1996]

)

fit_02 = model_02.fit()

print(fit_02.summary())

OLS Regression Results

=====================================================================================

Dep. Variable: log_real_health_spend_cap R-squared: 0.524

Model: OLS Adj. R-squared: 0.443

Method: Least Squares F-statistic: 6.429

Date: Thu, 21 Apr 2022 Prob (F-statistic): 0.000123

Time: 17:04:32 Log-Likelihood: 47.149

No. Observations: 42 AIC: -80.30

Df Residuals: 35 BIC: -68.13

Df Model: 6

Covariance Type: nonrobust

===================================================================================================

coef std err t P>|t| [0.025 0.975]

---------------------------------------------------------------------------------------------------

Intercept 8.1539 0.025 325.421 0.000 8.103 8.205

fin_incentive_ban[T.1.0] 0.0715 0.049 1.465 0.152 -0.028 0.171

house_prty_control[T.Split] 5.589e-16 1.57e-17 35.506 0.000 5.27e-16 5.91e-16

house_prty_control[T.Democrat] -0.0982 0.052 -1.900 0.066 -0.203 0.007

senate_prty_control[T.Split] -0.0912 0.096 -0.950 0.349 -0.286 0.104

senate_prty_control[T.Democrat] 0.0284 0.049 0.578 0.567 -0.071 0.128

house_ideology -0.2521 0.057 -4.450 0.000 -0.367 -0.137

senate_ideology 0.0769 0.056 1.372 0.179 -0.037 0.191

==============================================================================

Omnibus: 11.066 Durbin-Watson: 1.901

Prob(Omnibus): 0.004 Jarque-Bera (JB): 10.628

Skew: -1.066 Prob(JB): 0.00492

Kurtosis: 4.237 Cond. No. 9.35e+17

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The smallest eigenvalue is 8.01e-35. This might indicate that there are

strong multicollinearity problems or that the design matrix is singular.

Notice how much the point estimate slightly decreases after including controls. Now the model is telling us that a when a state adopts policies that ban financial incentives for doctors increases a state’s per capita spending on health care by about 7.42 percent.

Let’s make a table for these results with stargazer.

from stargazer.stargazer import Stargazer

results_table = Stargazer([fit_01, fit_02])

results_table.rename_covariates(

{"fin_incentive_ban[T.1.0]": "Financial Incentive Ban",

"house_ideology": "House Ideology",

"house_prty_control[T.Democrat]": "Democrat Controlled House",

"house_prty_control[T.Split]": "Split Party Controlled House",

"senate_ideology": "Senate Ideology",

"senate_prty_control[T.Democrat]": "Democrat Controlled Senate",

"senate_prty_control[T.Split]": "Split Party Controlled Senate"}

)

results_table

| Dependent variable:log_real_health_spend_cap | ||

| (1) | (2) | |

| Intercept | 8.103*** | 8.154*** |

| (0.017) | (0.025) | |

| Financial Incentive Ban | 0.078 | 0.072 |

| (0.059) | (0.049) | |

| House Ideology | -0.252*** | |

| (0.057) | ||

| Democrat Controlled House | -0.098* | |

| (0.052) | ||

| Split Party Controlled House | 0.000*** | |

| (0.000) | ||

| Senate Ideology | 0.077 | |

| (0.056) | ||

| Democrat Controlled Senate | 0.028 | |

| (0.049) | ||

| Split Party Controlled Senate | -0.091 | |

| (0.096) | ||

| Observations | 50 | 42 |

| R2 | 0.035 | 0.524 |

| Adjusted R2 | 0.015 | 0.443 |

| Residual Std. Error | 0.113 (df=48) | 0.086 (df=35) |

| F Statistic | 1.737 (df=1; 48) | 6.429*** (df=6; 35) |

| Note: | *p<0.1; **p<0.05; ***p<0.01 | |

Differences-in-differences Model

With differences-in-differences estimation, our goal is to control for differences in the treatment and control groups by looking only at how they change over time. A simple estimation of this uses this formula:

$$ \text{DiD Estimate} = \text{E}[Y^{after}{treated} - Y^{after}{control}] - \text{E}[Y^{before}{treated} - Y^{before}{control}] $$

This gives us the difference between the treatment and control groups after the intervention ($\text{E}[Y^{after}{treated} - Y^{after}{control}]

Before building the model, however, we are going to restrict our data to just six states. Three that adopted the policy in 1996 (California, Florida, and Maryland) and three that never adopted the policy (Virginia, Colorado, and Washington). This will make things easier, as I will explain later. Note that the | operator means “or.”

diff_data = policy_data \

.loc[

(policy_data.state == "California") | (policy_data.state == "Colorado") |

(policy_data.state == "Florida") | (policy_data.state == "Maryland") |

(policy_data.state == "Washington") | (policy_data.state == "Virginia")

]

Now let’s add a variable to identify which states are affected by the treatment and the first year that they received the treatment.

diff_data = diff_data \

.assign(treatment_group = np.where(

(diff_data.state == "California") | (diff_data.state == "Florida") |

(diff_data.state == "Maryland"), 1, 0

))

Now we have a variable named treatment_group that tells us which states are in the treatment group.

Let’s review the important elements of our dataset. We have a column called fin_incentive_ban that tells us the year when states in the treatment group received the treatment and we have a column called treatment_group that tells us which states are in the treatment group and which are in the control group.

I limited my data to just California and Colorado, because California adopts the treatment in 1996, but Colorado never does. We could include all states, but that introduces complexity since not every state adopts the treatment in the same year. This leads to what is called a staggered difference in difference design. There have been a plethora of papers published on staggered difference in difference designs in the last 5 years - so these methods are rapidly changing. See How much should we trust staggered difference-in-differences estimates? as a primer for how they work and their potential problems. Instead of exploring that, I’m just going to compare two states.

Before we calculate the DiD estimate, and since we have multiple pre-treatment periods, let’s check the parallel trends assumption:

trends_plt = sns.lineplot(

data = diff_data,

x = "year",

y = "log_real_health_spend_cap",

hue = "fin_incentive_ban",

style = "state",

units = "state",

estimator = None

)

trends_plt.set_xlabel("Year")

trends_plt.set_ylabel("Real Health Spending per Capita")

trends_plt.set_xticks(range(1992, 2009, 1))

trends_plt.set_xlim(1993, 2010)

trends_plt.axvline(x = 1996, color = "black", linestyle = "--")

trends_plt.annotate("Policy Adoption" , xy = (1996.5, 8.5))

Text(1996.5, 8.5, 'Policy Adoption')

Those trends really aren’t parallel. Why does it matter? Well, if health spending in California was already trending up, as compared to Texas, before the treatment began then any differences after the treatment are probably due to whatever was causing those previous trends. In fact, this plot shows that after California adopts the treatment, both stats follow similar trends. This indicates that the policy doesn’t really affect health spending.

Now we’re ready to calculate the DiD estimate:

diff_data \

.groupby(["treatment_group", "fin_incentive_ban"]) \

.aggregate({"log_real_health_spend_cap" : "mean"})

| log_real_health_spend_cap | ||

|---|---|---|

| treatment_group | fin_incentive_ban | |

| 0 | 0.0 | 8.170823 |

| 1.0 | NaN | |

| 1 | 0.0 | 8.123392 |

| 1.0 | 8.334788 |

Row one shows the mean health spending for the control group before the treatment period, row three shows the mean health spending for the treatment group before the treatment period, and row four shows the mean health spending for the treatment group after the treatment takes place. Why did we get a NaN value in row two? Go back and look through data for members of the control group. What is .loc[fin_incentive_ban == "Yes"] for states in the control group?

There are no states in the control group that have a value of “Yes” in the fin_incentive_ban column. That means that we can’t calculate

How can we fix this? All we have to do is create a column that tells us when the treatment period starts (which is 1996 for our data):

diff_data = diff_data \

.assign(treatment_period = np.where(diff_data.year >= 1996, 1, 0))

Now let’s look at California and Colorado, a treatment and control members, to see what is going with these columns:

diff_data \

.loc[:, ["year", "state", "fin_incentive_ban", "treatment_group", "treatment_period"]] \

.sort_values(["state", "year"])

| year | state | fin_incentive_ban | treatment_group | treatment_period | |

|---|---|---|---|---|---|

| 4645 | 1991 | California | 0.0 | 1 | 0 |

| 4696 | 1992 | California | 0.0 | 1 | 0 |

| 4747 | 1993 | California | 0.0 | 1 | 0 |

| 4798 | 1994 | California | 0.0 | 1 | 0 |

| 4849 | 1995 | California | 0.0 | 1 | 0 |

| ... | ... | ... | ... | ... | ... |

| 5402 | 2005 | Washington | 0.0 | 0 | 1 |

| 5453 | 2006 | Washington | 0.0 | 0 | 1 |

| 5504 | 2007 | Washington | 0.0 | 0 | 1 |

| 5555 | 2008 | Washington | 0.0 | 0 | 1 |

| 5606 | 2009 | Washington | 0.0 | 0 | 1 |

114 rows × 5 columns

For California, it doesn’t look like there is any difference between the fin_incentive_ban and treatment_period columns. But for Colorado, treatment_period gets a value of 1 in 1996 even though Colorado never receives the treatment.

With the treatment_group and the treatment_period columns, we have all the ingredients that we need. Now let’s finish calculating the post-treatment differences and the DiD estimate.

diff_values = diff_data \

.groupby(["treatment_group", "treatment_period"]) \

.aggregate({"log_real_health_spend_cap": "mean"}) \

.sort_values("treatment_period") \

.reset_index()

diff_values

| treatment_group | treatment_period | log_real_health_spend_cap | |

|---|---|---|---|

| 0 | 0 | 0 | 7.980795 |

| 1 | 1 | 0 | 8.123392 |

| 2 | 0 | 1 | 8.238691 |

| 3 | 1 | 1 | 8.334788 |

# DiD estimate

(diff_values.iloc[3, 2] - diff_values.iloc[2, 2]) - (diff_values.iloc[1, 2] - diff_values.iloc[0, 2])

-0.046498870741971565

Looks like the treatment has a negative effect on the log of health spending per capita. Specifically, when a state adopts policies that ban financial incentives for doctors decreases a state’s per capita spending on health care by about 6 percentage points.

It’s great that we have the DiD estimate, but if we want to conduct a hypothesis test we’ll need to calculate its standard errors. The easiest way to do this is to run a regression. The specification we need to use is this:

$$ \log(\text{Health Spending}){it} = \alpha + \beta{1} \text{T}{it} + \beta{2} \text{G}{it} + \beta{3}\text{T}{it} \times \text{G}{it} + \epsilon_{it} $$

where treatment_period, treatment_group, and treatment_period and treatment_group (this is the DiD estimate). Let’s run it!

did_model = smf.ols(

"log_real_health_spend_cap ~ treatment_period * treatment_group",

data = diff_data

)

did_fit = did_model.fit()

print(did_fit.summary())

OLS Regression Results

=====================================================================================

Dep. Variable: log_real_health_spend_cap R-squared: 0.451

Model: OLS Adj. R-squared: 0.436

Method: Least Squares F-statistic: 30.08

Date: Thu, 21 Apr 2022 Prob (F-statistic): 2.80e-14

Time: 17:04:33 Log-Likelihood: 71.446

No. Observations: 114 AIC: -134.9

Df Residuals: 110 BIC: -123.9

Df Model: 3

Covariance Type: nonrobust

====================================================================================================

coef std err t P>|t| [0.025 0.975]

----------------------------------------------------------------------------------------------------

Intercept 7.9808 0.034 234.830 0.000 7.913 8.048

treatment_period 0.2579 0.040 6.514 0.000 0.179 0.336

treatment_group 0.1426 0.048 2.967 0.004 0.047 0.238

treatment_period:treatment_group -0.0465 0.056 -0.830 0.408 -0.157 0.064

==============================================================================

Omnibus: 2.650 Durbin-Watson: 0.434

Prob(Omnibus): 0.266 Jarque-Bera (JB): 1.883

Skew: -0.118 Prob(JB): 0.390

Kurtosis: 2.416 Cond. No. 9.61

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

Check out the estimate for our interaction treatment_period:treatment_group! Exactly what we calculated. Unfortunately, however, the treatment doesn’t seem to have any effect. This is not a great differences in difference model because the assumptions don’t hold, but hopefully it showed you how diff-in-diff estimation works. Let’s add this model to our results table.

results_table = Stargazer([fit_01, fit_02, did_fit])

results_table.rename_covariates(

{"fin_incentive_ban[T.1.0]": "Financial Incentive Ban",

"house_ideology": "House Ideology",

"house_prty_control[T.Democrat]": "Democrat Controlled House",

"house_prty_control[T.Split]": "Split Party Controlled House",

"senate_ideology": "Senate Ideology",

"senate_prty_control[T.Democrat]": "Democrat Controlled Senate",

"senate_prty_control[T.Split]": "Split Party Controlled Senate",

"treatment_period:treatment_group": "Diff-in-Diff Estimate",

"treatment_group": "Treatment Group",

"treatment_period": "Treatment Period"}

)

results_table

| Dependent variable:log_real_health_spend_cap | |||

| (1) | (2) | (3) | |

| Intercept | 8.103*** | 8.154*** | 7.981*** |

| (0.017) | (0.025) | (0.034) | |

| Financial Incentive Ban | 0.078 | 0.072 | |

| (0.059) | (0.049) | ||

| House Ideology | -0.252*** | ||

| (0.057) | |||

| Democrat Controlled House | -0.098* | ||

| (0.052) | |||

| Split Party Controlled House | 0.000*** | ||

| (0.000) | |||

| Senate Ideology | 0.077 | ||

| (0.056) | |||

| Democrat Controlled Senate | 0.028 | ||

| (0.049) | |||

| Split Party Controlled Senate | -0.091 | ||

| (0.096) | |||

| Treatment Group | 0.143*** | ||

| (0.048) | |||

| Treatment Period | 0.258*** | ||

| (0.040) | |||

| Diff-in-Diff Estimate | -0.046 | ||

| (0.056) | |||

| Observations | 50 | 42 | 114 |

| R2 | 0.035 | 0.524 | 0.451 |

| Adjusted R2 | 0.015 | 0.443 | 0.436 |

| Residual Std. Error | 0.113 (df=48) | 0.086 (df=35) | 0.132 (df=110) |

| F Statistic | 1.737 (df=1; 48) | 6.429*** (df=6; 35) | 30.082*** (df=3; 110) |

| Note: | *p<0.1; **p<0.05; ***p<0.01 | ||

Summary